What is Loki?

Announced by Grafana Labs at KubeCon Seattle 2018, Loki is a log aggregation backend aimed at users who run Prometheus and Kubernetes and need to search and visualize large volumes of logs, with log exploration available in Grafana 6.0.

Loki was designed to be cost-effective, easy to operate and horizontally scalable. Rather than indexing the full text of every log line, it indexes only a set of labels for each log stream. Because these are the same Kubernetes-based labels already used by Prometheus, switching between metrics and logs is straightforward.

How Does Loki Augment Cloud DataVision?

Matching metadata between metrics and logs is essential, and Loki was initially built to target Kubernetes only. The idea is to run a log-collection agent on every node, use it to collect logs, query the Kubernetes API to determine the correct metadata for those logs, and send them to a central service from which they can be displayed in DataVision.

Logs are ingested through Loki's API. An agent called Promtail collects Kubernetes logs and attaches label metadata before sending them to Loki. This labeling works exactly the same way as in Prometheus, so you end up with exactly the same labels for your resources.
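To make the labeling step concrete, here is a minimal sketch of the kind of request an agent sends to Loki. It assumes Loki's HTTP push endpoint (`/loki/api/v1/push`) and its JSON body format; Promtail itself typically sends a compressed protobuf body instead, and the `build_push_payload` helper and label values are hypothetical.

```python
import json
import time


def build_push_payload(labels, lines, ts_ns=None):
    """Build a JSON body for Loki's /loki/api/v1/push endpoint (hypothetical helper).

    labels: dict of label name -> value identifying one log stream,
            i.e. the same Kubernetes labels Prometheus would attach.
    lines:  raw log lines belonging to that stream.
    """
    if ts_ns is None:
        ts_ns = time.time_ns()
    # Loki expects timestamps as strings of nanoseconds since the Unix epoch;
    # each line gets a distinct, increasing timestamp here.
    values = [[str(ts_ns + i), line] for i, line in enumerate(lines)]
    return json.dumps({"streams": [{"stream": labels, "values": values}]})


# Hypothetical labels an agent might discover from the Kubernetes API.
pod_labels = {"namespace": "default", "pod": "nginx-7c9f", "container": "nginx"}
body = build_push_payload(pod_labels, ["GET / 200", "GET /health 200"], ts_ns=1_000)
print(body)
```

The resulting body would be POSTed with `Content-Type: application/json` to the push endpoint of your Loki instance. Because the labels form the stream's identity, keeping them identical to Prometheus labels is what lets you jump from a metric to the matching logs.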

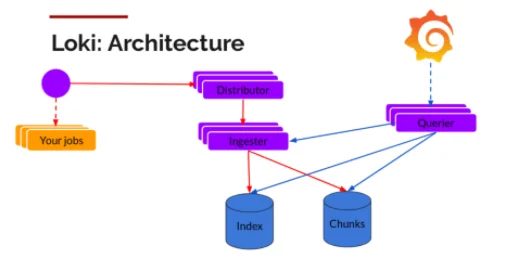

Distributor

Once Promtail collects the logs and sends them to Loki, the Distributor is the first component to receive them. Because it may be receiving a very large number of writes every second, the incoming data needs to be batched and compressed as it arrives.

This is done by building compressed chunks of the data, gzipping entries as they come in. The ingester is the stateful component responsible for building and then flushing these chunks. Since there are many ingesters, all entries belonging to the same log stream must reach the same one; this is achieved by arranging the ingesters in a ring and using consistent hashing. When an entry comes in, the Distributor hashes the log's labels and then looks up which ingester to send the entry to based on the hash value.
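The routing step can be illustrated with a toy consistent-hash ring. This is a simplified sketch, not Loki's code: the real Distributor also mixes the tenant ID into the hash and writes each stream to several ingesters for replication, both of which are omitted here. The `HashRing` class, the SHA-256 hash and the token count are illustrative assumptions.

```python
import bisect
import hashlib


def _hash(key):
    # Stable 32-bit hash (Python's built-in hash() is randomized per process).
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:4], "big")


class HashRing:
    """Toy consistent-hash ring mapping log streams to ingesters (hypothetical)."""

    def __init__(self, ingesters, tokens_per_ingester=32):
        self._ring = []  # sorted list of (token, ingester name)
        for name in ingesters:
            for i in range(tokens_per_ingester):
                self._ring.append((_hash(f"{name}-{i}"), name))
        self._ring.sort()
        self._tokens = [token for token, _ in self._ring]

    def ingester_for(self, labels):
        # Serialize the label set in sorted order so the same labels always
        # produce the same key, regardless of dict insertion order.
        key = ",".join(f"{k}={v}" for k, v in sorted(labels.items()))
        h = _hash(key)
        # Walk clockwise to the first token >= h, wrapping around at the end.
        idx = bisect.bisect_left(self._tokens, h) % len(self._tokens)
        return self._ring[idx][1]


ring = HashRing(["ingester-0", "ingester-1", "ingester-2"])
stream = {"namespace": "default", "pod": "nginx-7c9f"}
print(ring.ingester_for(stream))
```

Giving each ingester many tokens spreads streams more evenly, and when an ingester joins or leaves the ring only the streams near its tokens move, rather than all of them being reshuffled.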

Ingester

The Ingester receives entries and starts building chunks. It appends incoming log lines to the current chunk, compressing them with gzip, and once a chunk fills up it flushes it to the database. After flushing, the Ingester creates a new, empty chunk and adds subsequent entries to it.
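A minimal sketch of that build/flush cycle is shown below. It assumes a simple entry-count threshold and a callback standing in for the database; in Loki, chunks are cut based on size and age limits and compression is configurable, so `ChunkBuilder` and `max_entries` are hypothetical simplifications.

```python
import gzip


class ChunkBuilder:
    """Toy ingester-side chunk: buffer entries, gzip them, flush when full."""

    def __init__(self, flush, max_entries=3):
        self._flush = flush              # callback that persists a finished chunk
        self._max_entries = max_entries  # hypothetical "chunk is full" threshold
        self._entries = []

    def append(self, ts_ns, line):
        self._entries.append(f"{ts_ns}\t{line}")
        if len(self._entries) >= self._max_entries:
            self.cut()

    def cut(self):
        if not self._entries:
            return
        # Compress the whole chunk and hand it to the store.
        data = gzip.compress("\n".join(self._entries).encode())
        self._flush(data)
        # Start a new, empty chunk for subsequent entries.
        self._entries = []


stored = []  # stands in for the backing database
chunk = ChunkBuilder(stored.append, max_entries=3)
for i in range(7):
    chunk.append(1_000 + i, f"line {i}")
chunk.cut()  # flush the partially filled final chunk
print(len(stored))  # 3
```

Compressing whole chunks rather than individual lines works well for logs, because lines within one stream tend to be highly repetitive.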

Querier

The Querier handles reads. For each query, it fetches all the relevant entries, from the ingesters for recent data still in memory and from the store for flushed chunks, and returns them for display in DataVision. It is designed to handle a large number of concurrent queries.
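Because results come from several places at once, the Querier has to combine them into one time-ordered view. The sketch below assumes each source already returns `(timestamp_ns, line)` pairs sorted by time and drops exact duplicates, which can appear when the same entry was replicated to more than one ingester. The `merge_results` function is hypothetical and ignores Loki's real query engine and iterators.

```python
import heapq


def merge_results(*sources, limit=100):
    """Merge time-sorted (ts_ns, line) lists from several sources (hypothetical).

    Each source (e.g. an ingester or the chunk store) returns entries sorted
    by timestamp; replicated writes can yield the same entry more than once.
    """
    merged = []
    last = None
    for entry in heapq.merge(*sources):
        if entry == last:  # equal entries are adjacent after merging; keep one
            continue
        merged.append(entry)
        last = entry
        if len(merged) >= limit:
            break
    return merged


ingester_a = [(1001, "GET / 200"), (1003, "GET /login 302")]
ingester_b = [(1001, "GET / 200"), (1004, "POST /api 500")]
store = [(990, "pod started")]
print(merge_results(store, ingester_a, ingester_b))
```

`heapq.merge` consumes the sorted inputs lazily, so the merge never needs to hold every source's full result in memory before applying the limit.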

Making it scalable

Loki can be scaled in the following ways:

- Storing the compressed chunks in a scalable object store

- Storing the index in Cassandra, Bigtable or DynamoDB

- Scaling Distributors and Queriers horizontally
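The key idea behind the first two points is that the index holds only labels and chunk references, while the bulky log content lives in the object store. The toy sketch below uses two plain dicts as stand-ins for those backends; `object_store`, `index`, `store_chunk`, `select` and the `chunks/` key prefix are all hypothetical names, and real label matching in LogQL supports more than the exact-equality subset check used here.

```python
import gzip

# Stand-ins for the two backends: an object store for chunks and a
# key-value index (Cassandra/Bigtable/DynamoDB in production).
object_store = {}  # chunk key -> compressed chunk bytes
index = {}         # frozenset of (label, value) pairs -> list of chunk keys


def store_chunk(labels, chunk_id, lines):
    key = f"chunks/{chunk_id}"
    object_store[key] = gzip.compress("\n".join(lines).encode())
    # The index records only the stream's labels and a reference to the chunk.
    index.setdefault(frozenset(labels.items()), []).append(key)


def select(matchers):
    """Return lines of every stream whose labels include all `matchers`."""
    wanted = set(matchers.items())
    out = []
    for label_set, keys in index.items():
        if wanted <= label_set:  # all requested label pairs are present
            for key in keys:
                out.extend(gzip.decompress(object_store[key]).decode().split("\n"))
    return out


store_chunk({"namespace": "default", "app": "nginx"}, "c1", ["GET / 200", "GET /a 404"])
store_chunk({"namespace": "kube-system", "app": "dns"}, "c2", ["query ok"])
print(select({"app": "nginx"}))  # ['GET / 200', 'GET /a 404']
```

Keeping the index this small is what makes Loki cheap to run: the index grows with the number of streams, not with the volume of log text.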

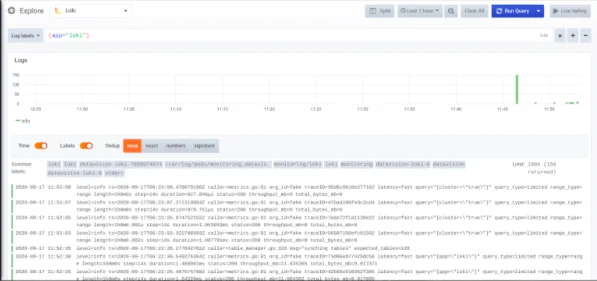

Loki in DataVision

We’ve integrated and enabled Loki in DataVision, so you can now see all your logs and dashboards in one place.

Different types of logs are available, so you can track what is happening at each level: for example, logs for individual containers, pods, applications, charts and namespaces.

You can also write your own LogQL queries to view logs. In addition, you can live-tail logs, refresh them at a fixed interval, or view logs for a particular date.
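As an illustration of what such a query looks like, the sketch below builds a request URL for Loki's `/loki/api/v1/query_range` endpoint with a LogQL expression that selects nginx containers in the `default` namespace and keeps only lines containing "error". The base URL (Loki's default port 3100 on localhost), the label values and the `query_range_url` helper are assumptions for illustration; how DataVision issues these requests internally is not described here.

```python
from urllib.parse import urlencode


def query_range_url(base_url, logql, start_ns, end_ns, limit=100, direction="backward"):
    """Build a Loki query_range request URL (hypothetical helper)."""
    params = {
        "query": logql,          # LogQL: stream selector plus optional filters
        "start": start_ns,       # start of the time range, ns since epoch
        "end": end_ns,           # end of the time range, ns since epoch
        "limit": limit,          # maximum number of entries to return
        "direction": direction,  # "backward" returns newest entries first
    }
    return f"{base_url}/loki/api/v1/query_range?{urlencode(params)}"


# Error lines from nginx containers in the default namespace.
logql = '{namespace="default", container="nginx"} |= "error"'
url = query_range_url(
    "http://localhost:3100", logql, 1_700_000_000_000_000_000, 1_700_000_360_000_000_000
)
print(url)
```

Choosing a particular date simply sets `start` and `end` to that day's boundaries, while live tailing uses Loki's separate `/loki/api/v1/tail` WebSocket endpoint instead of repeated range queries.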